Firebase Retention Cohort Query on BigQuery

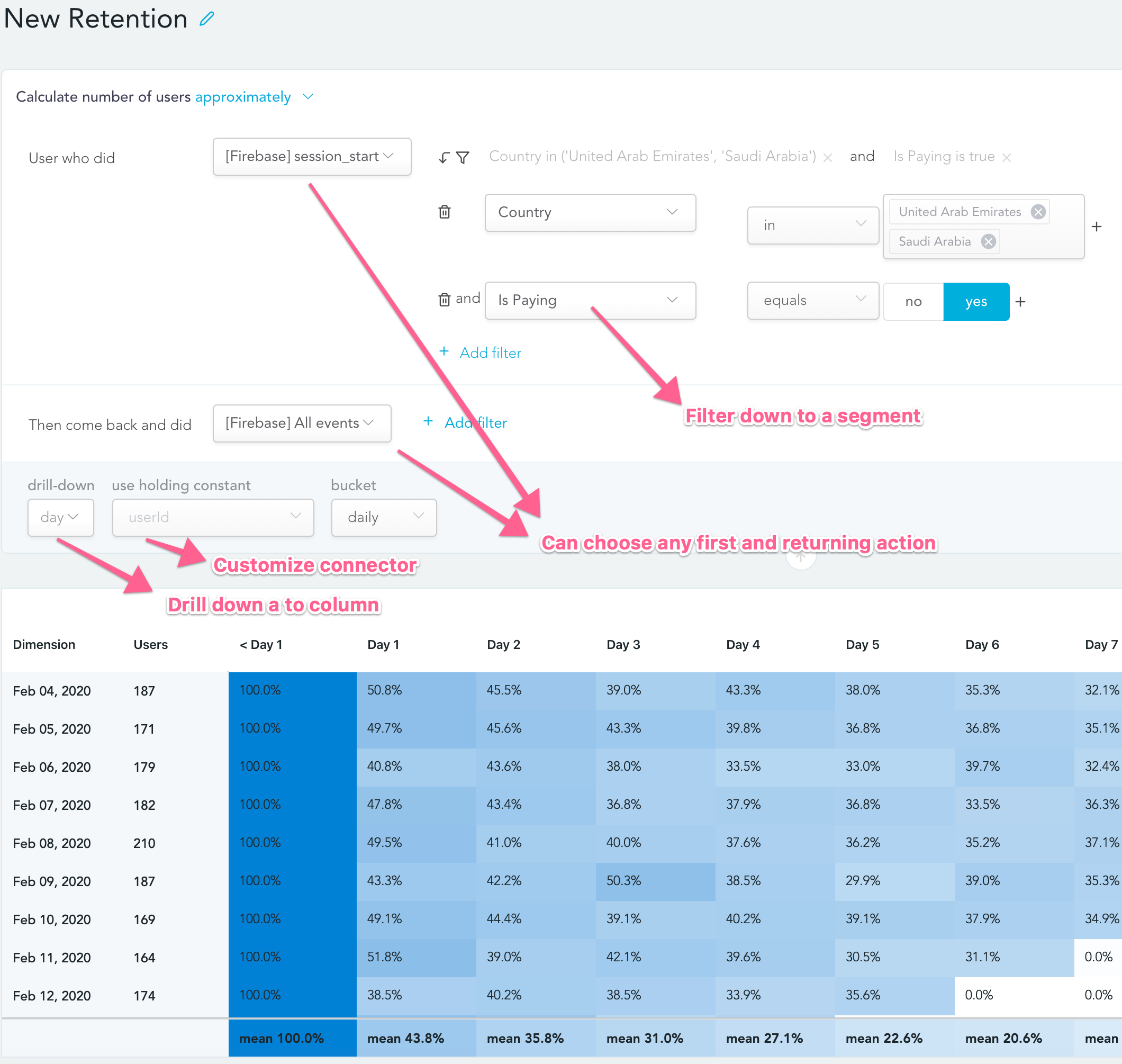

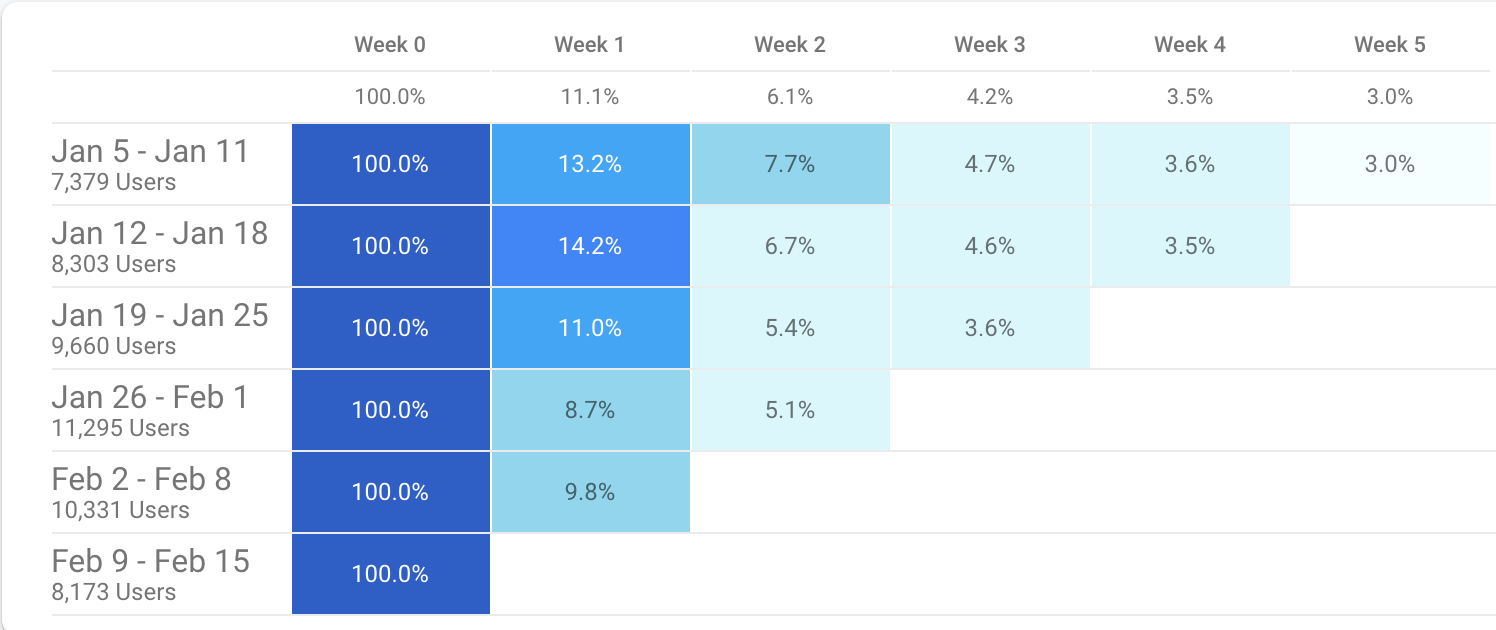

The cool features aside, Firebase is also a powerful analytics platform with built-in funnel and retention reports. You can filter your user segment on various dimensions such as platform, stream, demographics or custom user properties. Firebase can also attribute your installations marketing channels down to the ad-set and creatives. Although this power feature is free of charge there are some caveats such as Apple Search Ads and Facebook Ads that are not supported.If you’re focused more on marketing conversions, you can even filter your user segment on marketing data, if you’ve integrated your marketing channel.

TL;DR Composing an efficient retention query on BigQuery using probabilistic cardinality estimation function: HyperLogLog++, if you’re here for this jump here. No idea about Firebase’s canonical data structure on BigQuery, here

You can get answers to many questions with the builtin report that the UI provides you. But if you have the following needs, you need a bootstrap.

- Realtime data.

- Ability to filter on all event attributes.

- Filter the segment using external data (I.e: Marketing attributions from Adjust, S2S events from BigQuery).

- Select first and returning action.

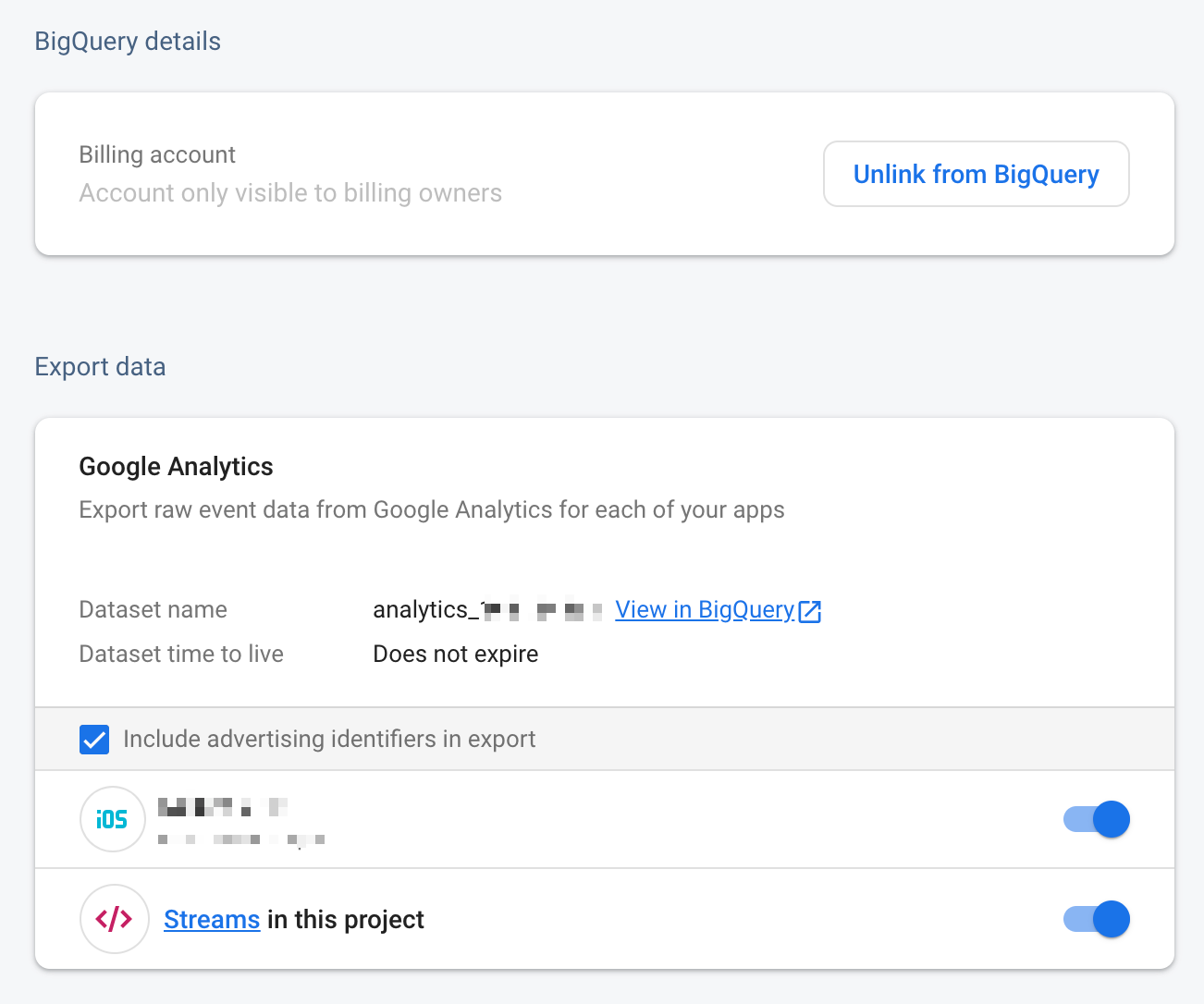

Firebase only lets you create a retention report from the New User segment, also you can not select a returning action. For example, I’d like to see the retention cohort of All Users performed any event, come back and did Checkout event. For such a detail report, you’ll have to work on the SQL level. Luckily, Firebase can export your event data to your BigQuery project in real-time. Just navigate to Settings -> Integrations -> BigQuery and enable the BigQuery export feature.

The BigQuery schema of Firebase is in Canonical form, a single table will be holding all your events as rows, user and event properties as an array of records in columns. For more details check out our previous post. You have linked your Firebase data and got a basic understanding of how your data is stored in BigQuery. Now it is time to go in deeper.

Composing Retention Report in SQL

Let’s start with the simplest, the below query declares first and section. You may filter it on top-level properties (country, device, etc), or on user & event parameters (you have it unnest it first.). Be sure to filter your segment with _TABLE_SUFFIX. Otherwise, it will be an expensive query.

WITH FIRST_ACTION AS (

SELECT user_id as connector, min(timestamp_trunc(TIMESTAMP_MICROS(event_timestamp), DAY)) as date

FROM `dataset.analytics_project_id.events_*`

WHERE _TABLE_SUFFIX BETWEEN '20200201' AND '20200210' AND event_name = 'session_start'

GROUP BY 1

),

RETURNING_ACTION AS (

SELECT user_id as connector, timestamp_trunc(TIMESTAMP_MICROS(event_timestamp), DAY) as date

FROM `dataset.analytics_project_id.events_*`

WHERE _TABLE_SUFFIX BETWEEN '20200201' AND '20200210' AND event_name = 'session_start'

)

Once you filtered your first and returning action segments, it is time to generate the cohort. What need here is simply;

- First appear date.

- Next period (in days, weeks or months depending on how you truncated the event_timestamp column).

- The number of users, for (first appear date + next period).

SELECT date, null as next_period, COUNT(DISTINCT connector) as users

FROM FIRST_ACTION

GROUP BY 1

UNION ALL

SELECT FIRST_ACTION.date, TIMESTAMP_DIFF(RETURNING_ACTION.date, FIRST_ACTION.date, DAY), COUNT(DISTINCT RETURNING_ACTION.connector) AS users

FROM FIRST_ACTION JOIN RETURNING_ACTION ON TIMESTAMP_DIFF(RETURNING_ACTION.date, FIRST_ACTION.date, DAY) BETWEEN 0 and 10 AND FIRST_ACTION.connector = RETURNING_ACTION.connector

GROUP BY 1, 2

ORDER BY 1, 2

First appear date is trivial, just group by the whole first action on your date column and count your connector. To find the number of users in the next period, we have to join the returning action on the connector column.

If you have relatively small data or don’t care about the speed/cost optimization, the approach above should be the trick to get answers to all your retention queries. However, this is big data and why not use cool cardinality estimation features 😎

Optimized Retention Query (using HyperLogLog)

Calculating the exact cardinality of big sets can demand high resources, estimating the cardinalities using probabilistic estimators can show significant performance difference compared to the deterministic method. Bigquery has tons of aggregation functions, as for analytics; HyperLogLog++ functions can estimate the cardinality of a set using a probabilistic approach. Check more details for each HLL function here.

The above query’s estimation function can be altered to APPROX_COUNT_DISTINCT to use HyperLogLog estimation. But the join statement is still there, which uses a connector (a high cardinality column) as the join condition.

Let’s ask the question: How do we calculate the number of users in the next period?

The number of users who came back for the next period is basically, the cardinality of intersections of first and returning action for that period, |A⋂B|.Using HLL_COUNT.MERGE_PARTIAL, we can combine two sets of cardinality, |A⋃B|.And we also know that;

`|A⋂B| = |A| + |B| - |A⋃B|

Let’s start with filtering first and returning action, but this time instead of counting the connector, we use HLL_COUNT.INIT function. This will export a binary representation of the cardinality.

WITH FIRST_ACTION AS (

SELECT

TIMESTAMP_TRUNC(TIMESTAMP_MICROS(event_timestamp), DAY) as DATE,

HLL_COUNT.INIT(user_id) as USER_ID_SET

FROM `dataset.analytics_project_id.events_*`

WHERE _TABLE_SUFFIX BETWEEN '20200201' AND '20200210' AND event_name = 'session_start'

GROUP BY 1

),

RETURNING_ACTION AS (

SELECT

TIMESTAMP_TRUNC(TIMESTAMP_MICROS(event_timestamp), DAY) as DATE,

HLL_COUNT.INIT(user_id) as USER_ID_SET

FROM `dataset.analytics_project_id.events_*`

WHERE _TABLE_SUFFIX BETWEEN '20200201' AND '20200210' AND event_name = 'session_start'

GROUP BY 1

),

DET AS (

SELECT

FA.DATE,

TIMESTAMP_DIFF(RA.DATE, FA.DATE, DAY) AS DIFF,

HLL_COUNT.EXTRACT(FA.USER_ID_SET) AS FA_USER_COUNT,

HLL_COUNT.EXTRACT(RA.USER_ID_SET) AS RA_USER_COUNT,

ARRAY[FA.USER_ID_SET, RA.USER_ID_SET] AS USER_ID_ARRAY

FROM FIRST_ACTION FA

JOIN RETURNING_ACTION RA

ON (FA.DATE <= RA.DATE)

),

AGG AS (

SELECT

DET.DATE,

DET.DIFF,

FA_USER_COUNT,

RA_USER_COUNT,

V as VALUE

FROM DET, UNNEST(DET.USER_ID_ARRAY) V

)

If you take a peek to `AGG`, it will show you the binary representation of cardinalities for each day and next period, alongside with estimated cardinalities of first and returning action for that day and next period.

Select * From Agg -> Each date + next period has a binary cardinality representation.

The next stage is to calculate the cardinality of intersections: |A⋂B| = |A| + |B|-A⋃B.

SELECT

DATE,

NULL,

HLL_COUNT.EXTRACT(USER_ID_SET)

FROM FIRST_ACTION

UNION ALL

SELECT

DATE,

DIFF,

(FA_USER_COUNT + RA_USER_COUNT) - HLL_COUNT.EXTRACT(SIM)

FROM (

SELECT

AGG.DATE,

AGG.DIFF,

FA_USER_COUNT,

RA_USER_COUNT,

HLL_COUNT.MERGE_PARTIAL(VALUE) AS SIM

FROM AGG

GROUP BY 1, 2, 3, 4

)

)

HLL_COUNT.MERGE_PARTIAL will combine (union) the cardinalities we grouped the query for, and the upper query will calculate the actual intersection via:

(FA_USER_COUNT + RA_USER_COUNT) — HLL_COUNT.EXTRACT(SIM)

The query can be easily materialized on the date column if you’d like to speed things more up. If you’re looking for an easier solution or more advanced query generation such as filtering your segment via joining another data source, we already handle all the steps and may more in rakam.

About Rakam

Model your data once and enable your non-technical users to get insights themselves. Can’t model data? Install one of our recipes to bootstrap your project, we support Firebase, Rakam-API, Snowplow, Segment, Tenjin, GA360 and much more weekly updated on this repository.