Recipes: Open-source data models for third-party integrations

From a small startup to a big enterprise, people care about your ideas only if you can back them with data. If you’re a marketer who wants to run a campaign that needs a budget, you probably need a hypothesis backed by data. If you’re a product person, you either ask your users or collect their data to decide which features your developer colleagues will implement. In short, data is the most convenient way to convince people.

However, most of us don’t have the resources to build the data infrastructure we need, so we use SaaS solutions for needs ranging from marketing, CRM, and product to finance. They give us the features we need without much work and simplify our workload. The problem is that as our company grows, our needs become more complex and the user interfaces of these SaaS tools become inadequate. That’s why most well-known SaaS solutions provide a way to export our data into our own database for further analysis. At this point, you usually need to hire a data analyst to write the SQL queries, but it takes time for them to understand the data layout, answer your questions in SQL, and create the reports you’re looking for.

Let’s take Segment as an example. It aims to be the data hub of your company and supports hundreds of data sources. The marketing and product departments pick the tools they’re going to use and enable them in the Segment UI, and Segment takes care of the rest. There is also an additional product called Segment Warehouses, which sends all your company data into your data warehouse for further analysis. It’s used by 66% of their serious customers because most of them don’t want to be limited by the integrations they’re using. The data warehouse can probably answer all the questions you have for reporting, but since tools such as Mixpanel and Amplitude provide a much more convenient interface with little learning curve, you just enable them in the Segment UI. When they can’t answer your question, you get help from the data analyst, who learns the data structure, writes the SQL, and shares the report with you.

Most product analytics tools, such as Heap, Amplitude, and Mixpanel, have these integrations in place, but the option is not limited to them. You also have it in marketing tools such as Adjust, Appsflyer, and Singular. Even if a tool doesn’t have a native integration with your data warehouse, there is now a growing market of ETL tools that collect all your company data into your data warehouse; Segment, Fivetran, and Stitch are just a few of them.

Over the last two years, we pushed hard to build a tool to consolidate your company data, but it took us some time to figure out that data collection is already a solved problem thanks to these ETL tools. If you don’t want to use a third-party service, cloud providers such as AWS, GCP, and Azure already offer a number of products for building a data pipeline specialized for your use case. The problem is usually the UI part, since the end user is either not technical enough or doesn’t want to spend time writing SQL.

We aim to solve this problem with recipes: you model your data once and let your non-technical people query it without needing to know the data schema. This provides a consistent view of your data across your whole organization, so that there is no room for mistakes. Looker is one of the pioneers in this area, but it is highly enterprise-focused, so it’s expensive and, more importantly, has a steep learning curve. We hope to address this with the following approach:

- Data analysts don’t need to learn a programming language and Git at first; they can model the data using our data modeling user interface.

- If the data models become complex, they can switch to an IDE and write Jsonnet models in VSCode. Jsonnet gives us a safe environment to model the data dynamically; we call it programmable analytics. More info is coming soon! (See github.com/rakam-io/recipes for a sneak peek.)

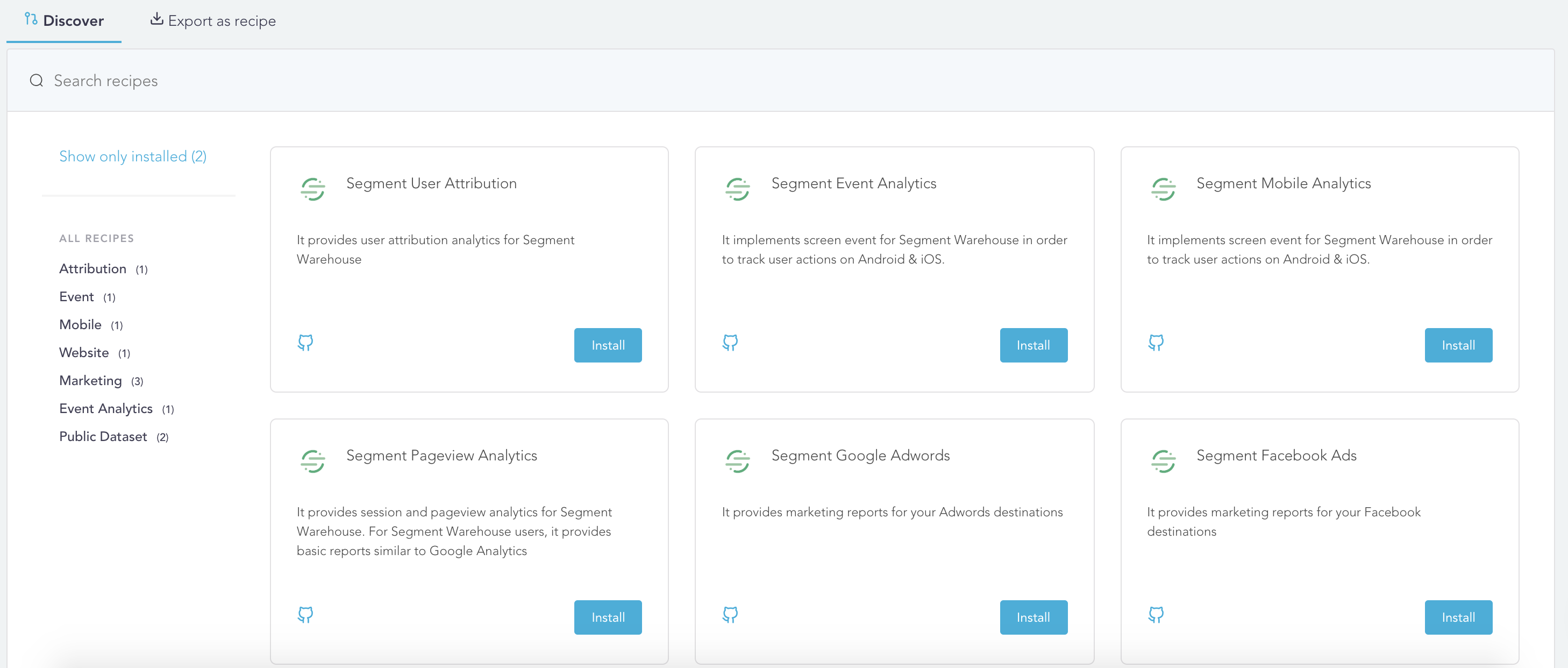

- Finally, we provide open-sourced data models for the integrations that have a well-defined schema. Installing a recipe takes just a few clicks, and most recipes come with a handy dashboard that helps you understand the data easily.
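To make the idea of modeling once and querying without knowing the schema more concrete, here is a minimal Python sketch. It is not how recipes are implemented; the `Model` class, the `analytics.events` table, and the column names are all hypothetical and only illustrate how a named measure and dimension could be compiled into SQL on behalf of a non-technical user.

```python
from dataclasses import dataclass, field


@dataclass
class Model:
    """Hypothetical data model: maps friendly names to SQL expressions."""
    table: str
    dimensions: dict = field(default_factory=dict)  # friendly name -> column expression
    measures: dict = field(default_factory=dict)    # friendly name -> aggregate expression

    def compile(self, measure: str, dimension: str) -> str:
        """Build a SQL query from friendly names only."""
        dim_sql = self.dimensions[dimension]
        measure_sql = self.measures[measure]
        return (
            f'SELECT {dim_sql} AS "{dimension}", {measure_sql} AS "{measure}" '
            f"FROM {self.table} GROUP BY 1"
        )


# The analyst defines the model once (table and columns are made up for this sketch).
events = Model(
    table="analytics.events",
    dimensions={"platform": "context_device_type"},
    measures={"active_users": "COUNT(DISTINCT user_id)"},
)

# A non-technical user only picks names; the schema stays hidden.
sql = events.compile(measure="active_users", dimension="platform")
print(sql)
```

Because the SQL expressions live in one place, everyone who asks for `active_users` gets the same definition, which is the consistency benefit described above.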

In the first phase, we open-sourced the data models for Segment, Firebase, and Tenjin. In case you want to give it a try, here is how it works:

- You connect your database.

- Find the solution that you’re using in the recipes and click install.

- Enter the variables that the recipe needs, such as the database and schema, columns, and dynamic values in a solution.

- And click install, boom!
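The variables step can be pictured as simple template substitution. The sketch below is an assumption about the general idea, not the actual recipe format: the template text, the variable names (`schema`, `table`, `user_column`), and the `install_recipe` helper are all hypothetical.

```python
from string import Template

# Hypothetical recipe: a query template with placeholders for user-supplied variables.
RECIPE_TEMPLATE = Template(
    "SELECT $user_column, COUNT(*) AS event_count "
    "FROM $schema.$table GROUP BY $user_column"
)


def install_recipe(variables: dict) -> str:
    """Fill the recipe template; raises KeyError if a required variable is missing."""
    return RECIPE_TEMPLATE.substitute(variables)


# Values a user might enter during installation (made up for this sketch).
query = install_recipe(
    {"schema": "segment", "table": "tracks", "user_column": "user_id"}
)
print(query)
```

Failing loudly on a missing variable, rather than leaving a placeholder in the query, keeps a half-configured recipe from producing broken reports.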

Here are the current integrations. Please give them a try and let us know if you have any feedback!